Search engines are evolving rapidly with AI-driven results, and Google’s AI Overviews (launched as the Search Generative Experience, or SGE) are pushing quality and relevance to the forefront. As we move further into 2025, index bloat, once considered a minor technical SEO issue, has become a serious threat to organic visibility. This matters more now that search engines rely more heavily on artificial intelligence to decide which content deserves attention and ranking.

Modern AI systems don’t just check if content exists. They assess site-wide quality, semantic coherence, and how efficiently a site is structured. When a website contains thousands of low-value, duplicate, or unnecessary pages, it not only wastes crawl resources but also undermines the site’s overall authority and trust in AI-driven ranking models.

In this guide, we’ll explore how artificial intelligence has transformed the SEO landscape, why bloated indexes now carry more risk than ever before, and which actionable steps you can take to improve crawl efficiency and maintain visibility in AI-powered search environments.

What Is Index Bloat in SEO and How Does It Occur?

Index bloat happens when search engines index more pages than necessary, including duplicate, low-value, or thin content. While not always intentional, index bloat can quietly erode your SEO performance. Today, with AI-generated overviews shaping how content is selected and displayed, having an overloaded index can significantly reduce your visibility.

In short, the more irrelevant or low-quality pages that search engines crawl and index, the less efficiently your crawl budget is used. As a result, it becomes much harder for your most important content to rank effectively.

Common Causes of Index Bloat in Large Websites

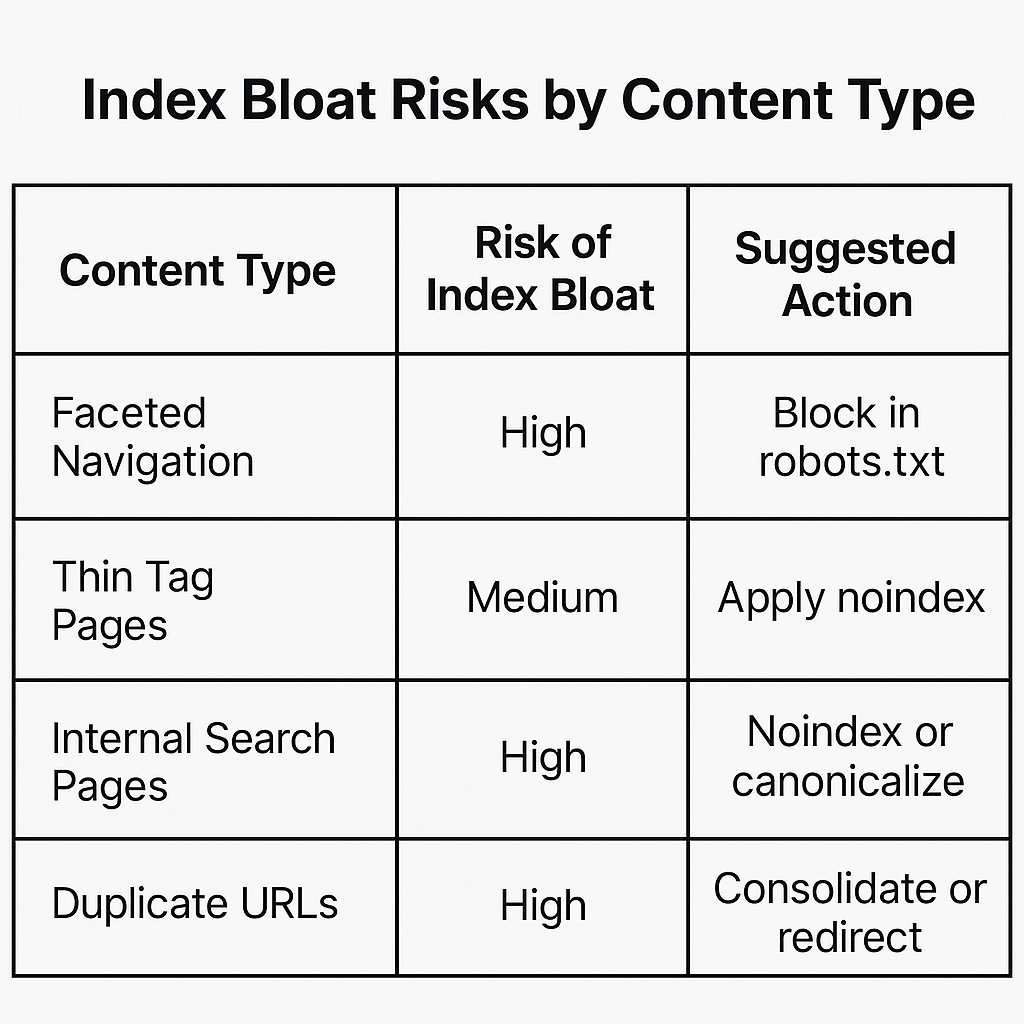

Large and dynamic websites are especially vulnerable. Common triggers include:

- Faceted navigation generating countless filtered versions of pages

- Internal search result pages being indexed accidentally

- Duplicate content due to URL parameters or session IDs

- User-generated content (like forums or comments) with minimal value

- Thin category or tag pages with little or no original content

- Paginated content causing excessive low-value URLs

These issues might not show immediate damage, but over time, they slow down search crawlers, dilute authority, and confuse indexing systems.

Examples of Index Bloat: Faceted Navigation, Tags, and Internal Search Pages

Let’s look at real-world scenarios:

- Ecommerce site: A clothing store allows filters for color, size, brand, and material, each generating a unique URL like /shop/shoes?color=blue&size=10&brand=Nike. Multiply that by hundreds of products, and you’ve got thousands of nearly identical pages.

- Internal search: A blog with an on-site search feature might index pages like /search?q=AI+tools, which serve no real value in SERPs.

- Tags and archives: WordPress sites often index every tag and category, creating duplicate content around the same topics.

These pages clog your index and burn crawl budget, especially when search bots waste time crawling them repeatedly.
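To make the faceted-navigation example concrete, here is a minimal Python sketch that groups filtered URLs under the listing page they duplicate. The `FACET_PARAMS` set and the sample URLs are assumptions for illustration; a real site would build the list from its own filter parameters.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these query parameters only filter a listing and never
# change which page it fundamentally is.
FACET_PARAMS = {"color", "size", "brand", "material"}


def canonical_url(url):
    """Drop facet parameters so filtered variants map to one listing URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in FACET_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_variants(urls):
    """Map each canonical URL to the crawled variants that collapse into it."""
    groups = {}
    for url in urls:
        groups.setdefault(canonical_url(url), []).append(url)
    return groups


# Hypothetical sample of crawled URLs.
crawled = [
    "/shop/shoes?color=blue&size=10&brand=Nike",
    "/shop/shoes?color=red&size=10",
    "/shop/shoes?page=2&color=blue",
    "/shop/shoes",
]

for canonical, variants in group_variants(crawled).items():
    print(canonical, len(variants))
```

Any canonical URL with a large number of variants is a likely source of bloat and a candidate for canonical tags, noindex, or crawl blocking.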

What Is Crawl Budget and Why Does It Matter for SEO?

Your crawl budget is the number of pages that Googlebot (or any other search crawler) is willing or able to crawl on your website within a given time frame. It’s a combination of how often search engines want to crawl your site and how much your server can handle without affecting performance.

While smaller websites with limited content may not need to worry much about crawl budget, it becomes absolutely critical for large-scale, frequently updated, or e-commerce websites with thousands of URLs. If crawlers spend too much time on unimportant or redundant pages, your most valuable content might be delayed in getting discovered. In some cases, it might not be indexed at all.

Google has confirmed that crawl budget primarily consists of two components:

- Crawl rate limit (called the crawl capacity limit in Google’s current documentation): This is the maximum number of requests a search crawler will make to your site without overwhelming your server. If your server responds slowly or returns errors, the crawler reduces its rate to avoid further impact.

- Crawl demand: This reflects how much interest Googlebot has in crawling specific URLs. It is influenced by factors like a page’s popularity, freshness, update frequency, and importance within your site architecture.

If your site includes a large number of unnecessary or low-value pages such as filtered category variations, internal search result pages, or outdated tag archives, Googlebot may waste valuable crawl resources on them. As a result, crucial content like product pages, blog posts, or service pages may not be crawled as often or as efficiently as needed.
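One way to see how much crawl activity goes to low-value URLs is to classify the paths Googlebot requested. The sketch below assumes you have already extracted those paths from your server logs and verified that the hits came from Googlebot. The URL patterns in `classify` are hypothetical and should be adapted to your own site.

```python
from collections import Counter
from urllib.parse import urlsplit


def classify(path):
    """Label a requested path using simple, site-specific rules (assumed here)."""
    parts = urlsplit(path)
    if parts.path.startswith("/search"):
        return "internal_search"
    if parts.path.startswith("/tag/"):
        return "tag_archive"
    if parts.query:
        return "parameterized"
    return "content"


def crawl_share(paths):
    """Return the fraction of crawler hits that fall into each label."""
    counts = Counter(classify(path) for path in paths)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


# Hypothetical sample of paths requested by Googlebot.
googlebot_hits = [
    "/shop/shoes?color=blue",
    "/shop/shoes?color=red",
    "/shop/shoes?size=10",
    "/search?q=AI+tools",
    "/tag/ai/",
    "/blog/ai-tools",
    "/blog/crawl-budget",
    "/products/runner-x",
]

for label, share in sorted(crawl_share(googlebot_hits).items()):
    print(f"{label}: {share:.1%}")
```

In this made-up sample, only three of the eight requests reach real content pages. That is the kind of ratio that suggests crawl budget is being wasted.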

In the current SEO landscape, where AI systems also evaluate a website’s structure, performance, and semantic consistency, a mismanaged crawl budget can significantly limit your search visibility. Optimizing your crawl budget helps search engines prioritize the content that matters most to your users, which in turn supports your rankings.

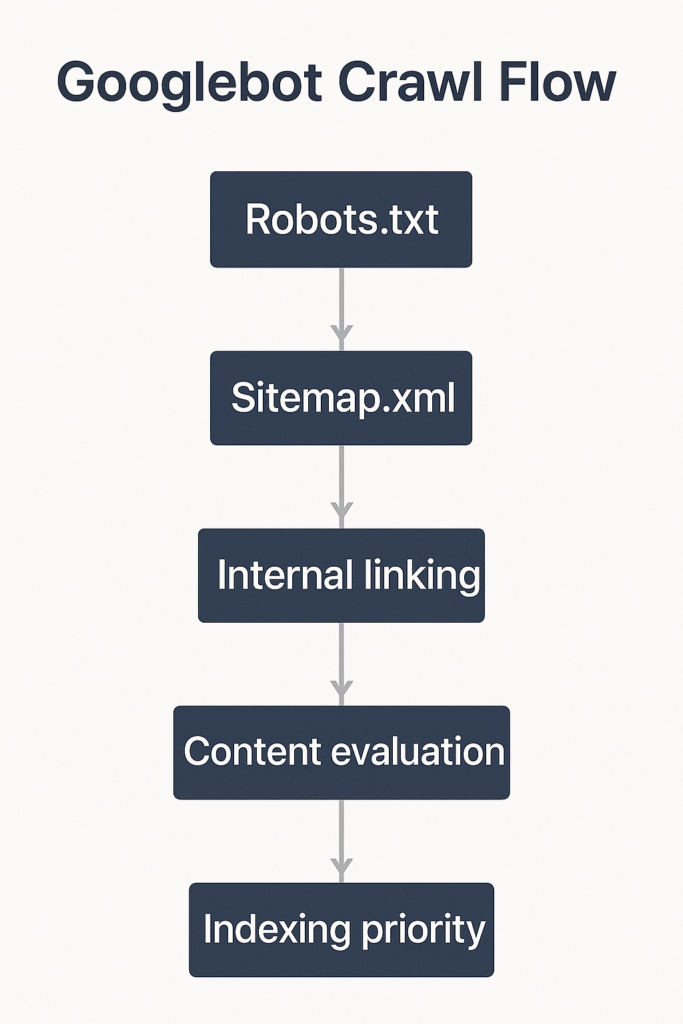

How Does Googlebot Manage Crawl Budget?

Googlebot uses a combination of signals to determine which pages to crawl and how often. These signals help the crawler assess the importance and efficiency of each page on your site. Key factors include:

- Server performance: If your server is slow or frequently returns errors, Googlebot may reduce its crawl rate to avoid overloading it.

- Page rank and backlinks: Pages with more inbound links, especially from trusted domains, are seen as more important and are crawled more often.

- Internal linking structure: Pages that are well-integrated into your site’s architecture are easier to discover and typically receive more crawl attention.

- Historical data: If a page has a history of frequent updates and engagement, Googlebot is more likely to revisit it on a regular basis.

Pages that consistently deliver value and demonstrate relevance tend to be crawled more frequently. On the other hand, low-value or bloated pages may be crawled less often or potentially ignored altogether, which limits their visibility in search results.

This makes it crucial to guide Googlebot’s behavior by optimizing your site’s structure, removing unnecessary pages, and ensuring your most valuable content is easy to find and navigate.

Crawl Budget and Index Bloat Challenges for Large and Dynamic Websites

For enterprise-level websites, the connection between crawl budget and index bloat becomes increasingly clear and impactful. These sites often generate thousands, sometimes even millions, of URLs. Many of these are technically accessible by search engines but offer little to no value for indexing.

Here are some of the most common challenges:

- Thousands of unnecessary pages: Filtered versions of content, faceted navigation URLs, and redundant tag pages can quickly inflate the total number of indexable URLs, wasting crawl budget on content that doesn’t drive organic traffic.

- Delayed indexing of important content: When crawlers spend their limited resources on less important pages, they may overlook or delay the indexing of high-priority updates, such as new product pages, blog posts, or service launches.

- Site-wide rankings may decline: Search engines may begin to view the site as low-quality due to the high ratio of irrelevant or thin pages, which can impact rankings across the entire domain.

- AI-powered search adds more pressure: Modern AI-driven models evaluate more than crawlability. They also assess semantic consistency, topical authority, and content relationships. A bloated index can confuse these systems and reduce a site’s chances of appearing in AI-generated overviews or featured results.

For dynamic websites with frequent updates and large-scale content generation, managing crawl budget and eliminating index bloat is no longer optional. It’s a foundational SEO requirement.

The Relationship Between AI Overview and Content Quality

With the rollout of AI Overviews in Google Search, the dynamics of visibility have fundamentally shifted. Rather than simply returning a list of blue links, search results are now increasingly summarized using generative AI. These overviews are built by analyzing and extracting insights from what the system determines to be high-quality, semantically rich, and trustworthy content.

This shift places a new kind of pressure on SEO professionals and content creators. It’s no longer enough to have indexable content. Now, your content must be both technically sound and genuinely valuable. That means delivering high-caliber information that is not only relevant to the user’s intent but also structured in a way that AI systems can understand and elevate.

How Do AI Overviews Select High-Quality Content?

AI-powered models assess content through a wide range of criteria, including:

- Topical authority: Is your content recognized as authoritative in its subject area?

- Semantic richness: Does the page go beyond surface-level keywords and deliver contextually meaningful information?

- Freshness and accuracy: Are your facts up to date, and is your content regularly maintained?

- Clear structure and intent alignment: Is the content well-organized and aligned with what users are actually searching for?

- Lack of duplication or fluff: Does the page offer original value, or does it simply repeat what others have already said?

If your high-quality pages are surrounded by outdated, duplicated, or thin content due to index bloat, the overall perceived quality of your site can suffer. AI systems evaluate your domain holistically. A cluttered, bloated index may reduce your chances of being selected as a source for AI-generated overviews, even if some individual pages are strong.

Why Do Low-Quality or Redundant Pages Lose Visibility in AI Results?

AI Overviews rely on reliable, well-structured content to build their summaries. When low-value pages are indexed:

- They confuse topic relevance signals

- They dilute topical clusters

- They compete internally with better content

- They may signal that your site has lower trustworthiness

That’s why managing your index and crawl budget is now directly tied to AI visibility.

How Does Index Bloat Impact AI Overview Visibility?

AI-powered search engines are built to prioritize quality above all else. Google’s AI Overviews, Bing’s AI-driven answers, and other emerging AI-based search formats are becoming increasingly selective about the sources they use to generate summaries and featured content.

In this new environment, index bloat becomes a significant threat. When your site contains large volumes of outdated, irrelevant, or duplicated pages, it sends mixed signals to AI systems. Even if some of your pages are high quality, the overall perception of your domain may be downgraded due to the surrounding clutter.

Search engines and their AI models now evaluate content holistically. They assess not just individual page quality, but also the consistency, authority, and usefulness of your domain as a whole. Index bloat weakens these signals by:

- Diluting the authority of important pages

- Making topic clusters appear disorganized or less focused

- Reducing trust in your content structure

- Wasting crawl budget and delaying the discovery of new or updated high-value content

Ultimately, if AI cannot clearly identify your site as a reliable and authoritative source, it is far less likely to include your content in AI Overviews or rich results. To stay competitive in the AI-driven search era, keeping your index lean, relevant, and optimized is essential.

Why Does Index Bloat Hurt AI-Driven Content Discovery?

Here’s how index bloat sabotages your presence in AI Overviews:

- Important content gets lost in a sea of low-value URLs

- Semantic clusters are weakened, confusing AI about your expertise

- AI rankings drop if the overall site quality is diluted

- Google may de-prioritize crawling your site, missing timely updates

Even if your main articles are strong, the surrounding noise of redundant, outdated, or low-quality pages can prevent your best work from surfacing in AI search summaries.

How to Fix Index Bloat and Optimize Crawl Budget

So, what’s the fix?

Here’s a simple but powerful approach to tackling index bloat in 2025:

1. Audit Your Index

Use Google Search Console and tools like Screaming Frog or Sitebulb to:

- Find low-value or duplicate pages that are indexed

- Identify pages with no clicks or impressions

- Compare indexed URLs against the URLs submitted in your XML sitemap
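The sitemap comparison can be sketched in a few lines of Python. This assumes you have exported the indexed URLs from Search Console or a crawler into a set. The sample sitemap and URLs below are placeholders, not real data.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org XML sitemap format.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Extract every <loc> URL from a standard XML sitemap."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def compare(indexed, submitted):
    """Report URLs that appear on only one side of the comparison."""
    return {
        "indexed_not_submitted": sorted(indexed - submitted),
        "submitted_not_indexed": sorted(submitted - indexed),
    }


# Placeholder sitemap and placeholder export of indexed URLs.
sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/ai-tools</loc></url>
  <url><loc>https://example.com/products/runner-x</loc></url>
</urlset>"""

indexed = {
    "https://example.com/",
    "https://example.com/blog/ai-tools",
    "https://example.com/search?q=AI+tools",
    "https://example.com/tag/ai/",
}

report = compare(indexed, sitemap_urls(sitemap))
print(report["indexed_not_submitted"])
print(report["submitted_not_indexed"])
```

URLs that are indexed but never submitted are the first place to look for bloat. URLs that are submitted but not indexed point to important content that crawlers may be missing.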

2. Set Up Smart Noindex Rules

Use a meta robots noindex tag (or the equivalent X-Robots-Tag HTTP header) for:

- Tag, category, or author pages with little unique value

- Internal search pages

- Thin UGC (user-generated content) or outdated archive pages
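Rules like these are often applied in a template or middleware layer. The following sketch shows one possible shape for that logic. The section list and the `MIN_WORDS` threshold are illustrative assumptions, not recommended values.

```python
from urllib.parse import urlsplit

# Assumptions for illustration: sections that should never be indexed,
# and a word count below which a page is treated as thin.
NOINDEX_SECTIONS = ("/search", "/tag", "/author")
MIN_WORDS = 150


def in_section(path, section):
    """True if the path is the section root or sits underneath it."""
    return path == section or path.startswith(section + "/")


def robots_directive(url, word_count):
    """Choose the robots directive for a page."""
    path = urlsplit(url).path
    if any(in_section(path, section) for section in NOINDEX_SECTIONS):
        return "noindex, follow"
    if word_count < MIN_WORDS:
        return "noindex, follow"
    return "index, follow"


def robots_meta_tag(url, word_count):
    """Render the directive as a meta tag for the page <head>."""
    return f'<meta name="robots" content="{robots_directive(url, word_count)}">'


print(robots_meta_tag("/search?q=AI+tools", 500))
print(robots_meta_tag("/blog/ai-tools", 1200))
```

Using "noindex, follow" keeps the page out of the index while still letting crawlers follow its links. The same value can be sent in an `X-Robots-Tag` response header for non-HTML files.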

3. Use Robots.txt Wisely

One of the most effective ways to control what gets crawled is by properly configuring your robots.txt file. This file tells search engine crawlers which parts of your website they are allowed to access and which ones they should avoid.

In particular, you should consider blocking the following types of pages from being crawled:

- Faceted navigation URLs that generate endless filter combinations

- Calendar or archive pages that create dozens of unnecessary date-based links

- Dynamically generated filter parameters that result in duplicate or near-identical content

However, be cautious when using this method.

Important: Do not block pages in robots.txt if you also intend to apply a noindex tag on them. Crawlers must be able to access a page in order to read and apply the noindex directive. If a page is blocked by robots.txt, search engines will not see the meta tag, and the page may remain indexed.

To maintain clarity and control over your site’s visibility, use a combination of robots.txt for crawl management and noindex tags for index management. Apply each method thoughtfully and in the appropriate context.
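Before deploying robots.txt changes, it helps to test them against sample URLs. The sketch below uses Python's standard `urllib.robotparser` module. The rules and paths are hypothetical. They use plain path prefixes because the standard-library parser does simple prefix matching rather than the wildcard patterns some search engines support.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block filter and calendar paths from crawling.
# /search is deliberately NOT blocked here, because those pages carry a
# noindex tag that crawlers must be able to fetch and read.
ROBOTS_TXT = """\
User-agent: *
Disallow: /shop/filter/
Disallow: /calendar/
"""


def crawl_allowed(robots_txt, url, agent="Googlebot"):
    """Return True if the given rules let the agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Sample URLs to check before the file goes live.
checks = [
    "https://example.com/shop/filter/color-blue/",
    "https://example.com/calendar/2025/01/",
    "https://example.com/shop/shoes",
    "https://example.com/search?q=AI+tools",
]

for url in checks:
    print(url, crawl_allowed(ROBOTS_TXT, url))
```

A check like this can catch the conflict described above, where a page that is meant to carry a noindex tag ends up blocked from crawling.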

4. Consolidate and Redirect

- Merge thin pages into rich pillar content

- 301-redirect duplicate or outdated pages to the most relevant live URL

- Find orphan pages (pages with no internal links pointing to them), then either link to them or remove them
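Orphan pages can be found by comparing the full list of known URLs with the set of internal link targets. The sketch below assumes you already have both from a crawl export. The page list and link map are made up for illustration.

```python
def find_orphans(all_pages, internal_links, entry_points=("/",)):
    """Return pages that no other page links to.

    all_pages: every known URL (for example, from the sitemap or CMS).
    internal_links: mapping of source page -> pages it links to.
    entry_points: pages that do not need inbound links, such as the homepage.
    """
    linked = set()
    for source, targets in internal_links.items():
        # Ignore self-links: a page linking to itself is still an orphan.
        linked.update(target for target in targets if target != source)
    return sorted(set(all_pages) - linked - set(entry_points))


# Hypothetical crawl export.
all_pages = [
    "/",
    "/blog/ai-tools",
    "/blog/crawl-budget",
    "/old-landing-page",
    "/products/runner-x",
]
internal_links = {
    "/": ["/blog/ai-tools", "/products/runner-x"],
    "/blog/ai-tools": ["/blog/crawl-budget", "/"],
    "/old-landing-page": ["/"],
}

print(find_orphans(all_pages, internal_links))  # ['/old-landing-page']
```

Each orphan then needs a decision: add internal links if the page is valuable, merge or redirect it if it overlaps with stronger content, or remove it.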

5. Enhance Content Quality

AI Overview visibility depends on quality. Focus on:

- Writing content with clear structure, expertise, and freshness

- Improving semantic relevance through internal links

- Regularly updating cornerstone content